What is Data Discovery & Classification?

How can databases be classified?

What are the limitations of this process?

What are the solutions?

Classification is done, now what next?

What is Data Discovery & Classification?

Data Discovery & Classification is a feature built into Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics. It provides basic capabilities for discovering, classifying, labeling, and reporting the sensitive data in your databases at no additional cost.

Your most sensitive data might include business, financial, healthcare, or personal information. It can serve as infrastructure for:

•Helping to meet standards for data privacy and requirements for regulatory compliance.

•Various security scenarios, such as monitoring (auditing) access to sensitive data.

•Controlling access to and hardening the security of databases that contain highly sensitive data.

Data Discovery & Classification currently supports the following capabilities:

•Discovery and recommendations: The classification engine scans your database and identifies columns that contain potentially sensitive data. It then provides you with an easy way to review and apply recommended classification via the Azure portal.

•Labeling: You can apply sensitivity-classification labels persistently to columns by using new metadata attributes that have been added to the SQL Server database engine. This metadata can then be used for sensitivity-based auditing scenarios.

•Query result-set sensitivity: The sensitivity of a query result set is calculated in real time for auditing purposes.

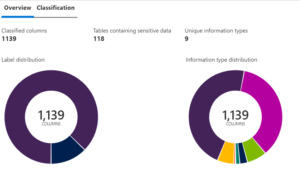

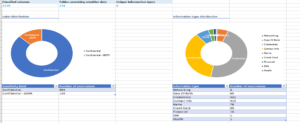

•Visibility: You can view the database-classification state in a detailed dashboard in the Azure portal. Also, you can download a report in Excel format to use for compliance and auditing purposes and other needs.

Default Classification Labels and Types.

The classification includes two metadata attributes:

•Labels: The main classification attributes, used to define the sensitivity level of the data stored in the column.

1.Public

2.General

3.Confidential

4.Confidential – GDPR

5.Highly Confidential

6.Highly Confidential – GDPR

•Information types: Attributes that provide more granular information about the type of data stored in the column.

•Banking Health

•National ID Credentials

•Networking Credit card

•SSN Date of birth

•Financial Other

•Name Contact Info

How can databases be classified?

The default data classification information types and labels are stored in SQL Information Protection Policy file. When we check for recommendations for classifications or want to apply them, it refers to this file. Microsoft does provide the ability for database administrators to modify this policy (labels and information types) and any changes done will reflect in the data classifications. But we will talk about this a little later and its use-cases.

Classifications can be managed via 2 methods:

T-SQL

Powershell cmdlets

From an automation perspective, when we need to apply the classifications for hundreds of databases or more the Azure PowerShell cmdlets come very handy, and I will focus on that.

We can use PowerShell Cmdlet to add or remove column classifications, as well as retrieve all classifications and get recommendations for the entire database.

Get-SqlSensitivityClassification

Get-SqlSensitivityRecommendations

Set-SqlSensitivityClassification

Remove-SqlSensitivityClassification

Here is a code snippet which loops through all subscriptions under a tenant, finds all resources of type- SQL Server and then loops through all databases (based on certain filters) and then first gets the recommended classifications and then applies them via the above Az PowerShell cmdlets.

$AzureSQLClassStatus1 = @()

$subscriptions = Get-AzSubscription

foreach ($sub in $subscriptions)

{

$sub | Set-AzContext | Out-Null

$AzureSQLServers =Get-AzResource |Where-Object ResourceType -EQ Microsoft.SQL/servers

foreach ($AzureSQLServer in $AzureSQLServers)

{

$AzureSQLServerDataBases = Get-AzSqlDatabase -ServerName $AzureSQLServer.Name -ResourceGroupName $AzureSQLServer.ResourceGroupName | Where-Object {($_.DatabaseName -ne "master") -and ($_.DatabaseName -like "*PROD")} | Where-Object {$_.SecondaryType -ne "Geo"}

foreach ($AzureSQLServerDataBase in $AzureSQLServerDataBases)

{

$svName =$AzureSQLServerDataBase.ServerName

$DBName =$AzureSQLServerDataBase.DatabaseName

$ResourceGroupName= $AzureSQLServer.ResourceGroupName

Write-Output "Applying Classifications for database:" $DBName

$classifications=Get-AzSqlDatabaseSensitivityRecommendation -ResourceGroupName $ResourceGroupName -ServerName $svName -DatabaseName $DBName |Set-AzSqlDatabaseSensitivityClassification

$appliedclassifications=Get-AzSqlDatabaseSensitivityClassification -ResourceGroupName $ResourceGroupName -ServerName $svName -DatabaseName $DBName

foreach ($class in $appliedclassifications)

{

$AzureSQLClassStatus = [PSCustomObject]@{

'ResourceGroupName' = $ResourceGroupName

'Database Server' = $svName

'Database Name' = $DBName

'Sensitivity Labels'=(@($class.SensitivityLabels) -join ',')

}

$AzureSQLClassStatus1 += $AzureSQLClassStatus

}

}

}The applied data classifications can be viewed either from Azure Portal or as a report from SSMS for a SQL Sever database.

What are the limitations of this process?

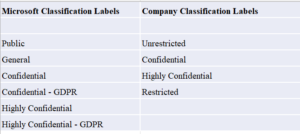

1.Mismatch of classification labels provided by Microsoft with that of an individual company’s IT Security Policy

Microsoft’s classification labels are intended to be a good starting point for organizations who need to classify their data, however it cannot be expected to match completely with individual’s company’s IT Security Policy which is often dictated by the nature of its business. For example, as shown in the picture above, this company has more restrictive data classification labels than that provided by Microsoft.

2.Accuracy of classifications

Search pattern strings use keywords with wildcard characters (using the character ‘%’), which the automated discovery engine uses to identify sensitive data in your databases, based on the columns’ metadata. This means there could be columns classified as sensitive even though they might not have any sensitive data or vice-versa. For example, if a company stores client address, client phone number and client email address in columns named text1, text2 and text3 respectively, the discovery engine would not pick it up.

What are the solutions?

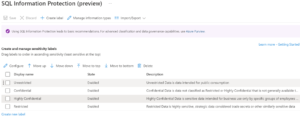

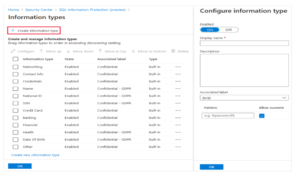

Azure provides the option to customize the Data classification Labels and Information types via a feature called SQL information protection policy.

SQL information protection’s data discovery and classification mechanism provides advanced capabilities for discovering, classifying, labeling, and reporting the sensitive data in your databases. You can customize the policy, according to your organization’s needs. This is available under Microsoft defender for cloud and when selected, it applies at the tenant level.

For example, Company XYZ Pvt Ltd has a certain type of client data related to customer questions, answers, feedback and comments which doesn’t fall into any of the default information types and hence doesn’t get classified as sensitive. However, exposure of this data to unauthorized public can lead to a data breach.

To overcome this issue, we can create a new Information type- Client Content and associated it with ‘Highly Confidential’ Label.

In-order to overcome the issue of over-estimation (classifying data as sensitive but is not), a manual review of all the tables of the database can be done and then PowerShell cmdlets can be run to remove these incorrect classifications and/or add new ones. Once the review is completed, the in-accurate classifications can be removed using either PowerShell or T-SQL commands.

Get-AzSqlDatabaseSensitivityClassification -ResourceGroupName resourceGroup -ServerName server -DatabaseName database | Remove-AzSqlDatabaseSensitivityClassification

DROP SENSITIVITY CLASSIFICATION FROM schema.Tablename.Columnname

Classification is done, now what next?

Auditing

An important aspect of the classification is the ability to monitor access to sensitive data. Azure SQL Auditing has been enhanced to include a new field in the audit log called data_sensitivity_information. This field logs the sensitivity classifications (labels) of the data that was returned by a query.

These are the activities that are auditable with sensitivity information:

ALTER TABLE … DROP COLUMN

BULK INSERT

DELETE,INSERT

MERGE,UPDATE

UPDATETEXT,WRITETEXT

DROP TABLE,BACKUP

DBCC CLONEDATABASE

SELECT INTO

INSERT INTO EXEC

TRUNCATE TABLE

DBCC SHOW_STATISTICS

sys.dm_db_stats_histogram

<sensitivity_attributes max_rank="20" max_rank_desc="Medium"><sensitivity_attribute label="Confidential" label_id="bac40f0b-cd20-4e1b-8bb5-3a6dc74a7d85" information_type="Credentials" information_type_id="c64aba7b-3a3e-95b6-535d-3bc535da5a59" rank="20" rank_desc="Medium"/></sensitivity_attributes>

<sensitivity_attributes max_rank="20" max_rank_desc="Medium"><sensitivity_attribute label="Confidential - GDPR" label_id="7097d922-0870-4afb-b33b-1db32f3e6eed" information_type="Name" information_type_id="57845286-7598-22f5-9659-15b24aeb125e" rank="20" rank_desc="Medium"/></sensitivity_attributes>Data Anonymization

Applying data classifications to the database stores the information in metadata table and allows it to be retrieved. This thereby enables us to build a process to dynamically anonymize the identified tables and their columns.

Use data classified in sys.sensitivity_classifications to generate a series of update statements for obfuscating Production data for use in non-production environments. Read my blog post on Anonymization Process for details regarding this.